This Technology May Slash AI Energy Use by 1,000x – Arriving Years Before Oklo’s First Reactor

Investors have poured $45 billion into zero-revenue nuclear startups, betting that AI data centers will drive insatiable power demand for decades. But a convergence of energy-efficient computing technologies, already demonstrating 100-1,000x efficiency gains in laboratories, threatens to demolish this investment thesis before the first small modular reactor (SMR) comes online.

These alternative chips are entering commercial deployment between 2025 and 2028, while nuclear startups target first revenue in 2028-2030. By the time Oklo’s inaugural reactor might begin operations, the computing landscape could have shifted completely toward architectures that draw a fraction of today’s power.

The Efficiency Revolution Nobody’s Pricing In

Quantum computing won’t affect AI workloads in the relevant timeframe: it faces data-loading bottlenecks and requires fault-tolerant systems not expected until the 2030s or 2040s. Four other technologies, however, are already delivering dramatic energy reductions:

Neuromorphic Computing: 100x Energy Gains Demonstrated

Brain-inspired spiking neural networks are achieving efficiency breakthroughs in research settings. A 2024 Nature Communications study showed neuromorphic circuits built from 2D-material tunnel FETs achieving two orders of magnitude higher energy efficiency than 7nm CMOS baselines. Memristor-based systems learned to play Atari Pong using 100x less energy than equivalent GPU implementations.
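
Part of the efficiency of spiking hardware comes from being event-driven: a neuron does work only when an input spike arrives, rather than multiplying every input by every weight on every step. The following is a minimal Python sketch of one leaky integrate-and-fire layer, assuming illustrative `threshold` and `decay` values and hypothetical helper names (`lif_layer`, `dense_ops`). It counts synaptic operations rather than measuring energy, and it is not how the cited chips are actually built.

```python
import random
from typing import List, Tuple


def dense_ops(n_inputs: int, n_outputs: int, timesteps: int) -> int:
    """Multiply-accumulates a conventional dense layer performs: every
    input meets every weight on every timestep, active or not."""
    return n_inputs * n_outputs * timesteps


def lif_layer(
    spike_trains: List[List[int]],
    weights: List[List[float]],
    threshold: float = 1.0,  # illustrative firing threshold (assumption)
    decay: float = 0.9,      # illustrative membrane leak per step (assumption)
) -> Tuple[List[List[int]], int]:
    """Simulate one layer of leaky integrate-and-fire neurons.

    spike_trains[t][i] is 1 if input i spikes at step t, else 0.
    weights[i][j] connects input i to output neuron j.
    Returns the output spikes per step and the number of synaptic
    additions actually performed.
    """
    n_out = len(weights[0])
    potential = [0.0] * n_out
    outputs: List[List[int]] = []
    synaptic_ops = 0
    for spikes in spike_trains:
        potential = [v * decay for v in potential]  # leak toward zero
        for i, spiked in enumerate(spikes):
            if not spiked:
                continue  # event-driven: silent inputs trigger no work
            for j in range(n_out):
                potential[j] += weights[i][j]
                synaptic_ops += 1
        fired = [1 if v >= threshold else 0 for v in potential]
        # Neurons that fired reset their membrane potential to zero.
        potential = [0.0 if f else v for v, f in zip(potential, fired)]
        outputs.append(fired)
    return outputs, synaptic_ops


if __name__ == "__main__":
    rng = random.Random(0)
    n_in, n_out, steps, rate = 100, 10, 50, 0.05  # ~5% input activity
    trains = [[1 if rng.random() < rate else 0 for _ in range(n_in)]
              for _ in range(steps)]
    w = [[rng.uniform(0.0, 0.3) for _ in range(n_out)] for _ in range(n_in)]
    _, sparse = lif_layer(trains, w)
    print(f"event-driven ops: {sparse}, dense ops: {dense_ops(n_in, n_out, steps)}")
```

With roughly 5% of inputs active, the event-driven count lands near 5% of the dense count on average; the advantage shrinks as spiking activity rises.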

Photonic Computing: 1,000x Reduction Without Quantum

MIT researchers demonstrated a fully integrated photonic processor that completed a machine-learning classification task in under half a nanosecond with 92% accuracy. Photonic chips could cut the energy required for AI training by up to 1,000 times compared with conventional processors. And unlike GPUs, which require costly cooling systems, photonic chips generate comparatively little heat, promising further operational savings.

Current photonic systems are “50x faster, 30x more efficient” for specific AI inference tasks, with commercial prototypes demonstrated in 2024 and production scaling targeted for 2026-2028.

Application-Specific Integrated Circuits: 100-1,000x Gains

AI accelerators designed for specific tasks are “anywhere from 100 to 1,000 times more energy efficient than power-hungry GPUs,” according to IBM research. Google’s Tensor Processing Units (TPUs) already deliver 2-4x greater energy efficiency than leading GPUs for AI-specific inference and training, and they’re widely deployed today.

Computational RAM: 2,500x Energy Improvements

University of Minnesota researchers developed Computational Random-Access Memory (CRAM), which places processing inside the memory cells themselves. In MNIST handwritten-digit classification tests, CRAM proved 2,500 times more energy-efficient and 1,700 times faster than near-memory processing systems. The design targets a core inefficiency: moving data between memory and processors can consume as much as 200 times the energy of the computation itself.
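
The 200x data-movement figure implies a simple ceiling worth making explicit. Below is a minimal Python sketch, assuming energy per operation is just arithmetic plus data movement in arbitrary units; `residual_movement` is a hypothetical parameter for how much transfer cost survives once processing moves into memory. It models only this one term and does not attempt to reproduce the 2,500x MNIST result, which was measured against a near-memory processing system.

```python
def energy_per_op(compute: float, movement_ratio: float) -> float:
    """Energy of one operation (arbitrary units): the arithmetic itself plus
    moving its operands, which costs `movement_ratio` times the arithmetic."""
    return compute * (1.0 + movement_ratio)


def in_memory_gain(movement_ratio: float, residual_movement: float = 0.0) -> float:
    """Energy reduction factor from computing inside memory.

    residual_movement is the (hypothetical) fraction of the original
    data-movement cost that remains; 0.0 means transfers vanish entirely.
    """
    before = energy_per_op(1.0, movement_ratio)
    after = energy_per_op(1.0, movement_ratio * residual_movement)
    return before / after


if __name__ == "__main__":
    for residual in (0.0, 0.01, 0.1):
        print(f"residual {residual:>4}: {in_memory_gain(200.0, residual):.1f}x")
```

In this model, eliminating transfers entirely yields a 201x gain, but if even 10% of the movement cost remains the gain drops below 10x, which illustrates why computing inside the cells matters more than computing near them.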

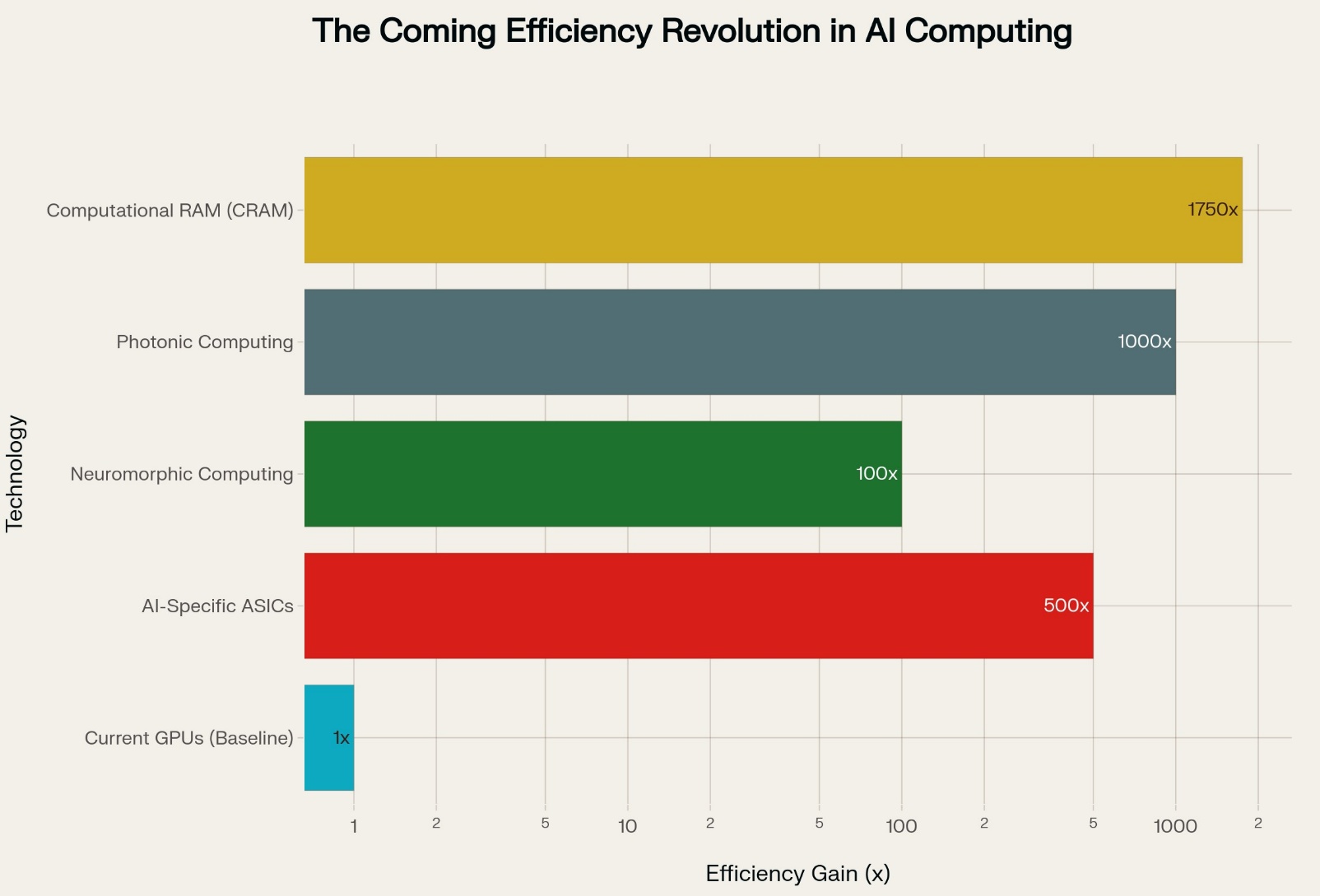

Energy efficiency gains of emerging computing technologies compared to current GPU baseline

Emerging computing technologies demonstrate 100x to 1,750x energy efficiency gains over current GPUs: neuromorphic chips at 100x, photonic computing at 1,000x, and CRAM systems reaching 1,750x improvements. If just 20-30% of AI workloads shift to these technologies by 2030, the exponential power demand nuclear startups are betting on could evaporate.

The Demand Plateau Scenario

The nuclear investment thesis assumes AI data center power demand will grow exponentially for decades. Multiple factors suggest this assumption is flawed:

Efficiency Gains Outpacing Demand: Over the past decade, computing capacity grew explosively, yet hardware and facility efficiency improvements held the increase in global data center energy consumption to roughly 6%. The next decade’s hardware advances could be even more dramatic.

Training vs. Inference Shift: Model deployment (inference), not training, accounts for 60-70% of AI lifecycle energy consumption. As models mature, the balance shifts further toward inference, which is exactly where specialized accelerators and photonic chips excel.

Peak AI Workload Scenario: Goldman Sachs projects U.S. data center power demand reaching 123 GW by 2035, growing 165% from 2024 levels. But these forecasts assume current GPU-based architecture dominates. If even 20-30% of workloads shift to neuromorphic, photonic, or specialized ASICs by 2030-2035, demand growth could be 50-70% lower than projected.
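
The link between workload shift and demand growth can be checked with a short calculation. A minimal Python sketch follows, assuming the 165% growth applies uniformly to all workloads, that shifted workloads do the same useful work, and that the shifted hardware is 100x more efficient (the neuromorphic figure cited earlier). The function names are hypothetical.

```python
def adjusted_demand(growth: float, shifted: float, efficiency: float,
                    baseline: float = 1.0) -> float:
    """Future demand if a fraction `shifted` of projected workloads moves to
    hardware `efficiency` times more energy-efficient. growth=1.65 is +165%."""
    projected = baseline * (1.0 + growth)
    return projected * ((1.0 - shifted) + shifted / efficiency)


def growth_reduction(growth: float, shifted: float, efficiency: float) -> float:
    """Fraction of the projected *increase* in demand that disappears."""
    projected = 1.0 + growth
    adjusted = adjusted_demand(growth, shifted, efficiency)
    return (projected - adjusted) / (projected - 1.0)


if __name__ == "__main__":
    for shifted in (0.2, 0.3):
        for efficiency in (100.0, float("inf")):
            cut = growth_reduction(1.65, shifted, efficiency)
            print(f"shift {shifted:.0%} at {efficiency}x: growth cut {cut:.1%}")
```

Under these assumptions a 20% shift cuts projected growth by about 32% and a 30% shift by about 48%. Even infinitely efficient hardware on 30% of workloads cannot push the cut much past 48%, so reaching 50-70% would also require efficiency gains on the workloads that remain on GPUs.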

The Commercial Timeline Mismatch

Nuclear startups promise first revenue between 2027 and 2030:

- Oklo: First revenue 2028

- NuScale: “Substantial revenue” 2028+

- Industry consensus: 2027-2030 for first commercial SMR operations

Alternative computing technologies are arriving simultaneously:

- Neuromorphic systems: Already deployed in research settings, with commercial scaling expected 2025-2027

- Photonic chips: Commercial prototypes demonstrated 2024, production scaling 2026-2028

- AI-specific ASICs: Already widely deployed, next-generation chips arriving 2025-2026

- CRAM technology: Patent applications filed, semiconductor partnerships forming for “large-scale demonstrations”

By the time Oklo’s first reactor begins operations (2028-2030), data center operators could have shifted toward architectures requiring 10-100x less power.

Data Center Economics Drive Adoption

Data center operators face intense economic pressure to adopt energy-efficient alternatives. Cooling alone accounts for 30-40% of operating costs, while power consumption represents the single largest operational expense for AI data centers.

A facility using photonic chips that consume 1/1000th the power of GPUs, or neuromorphic chips that use 1/100th, would gain overwhelming cost advantages over competitors locked into GPU infrastructure. Microsoft, Google, Amazon, and Meta are hedging across multiple approaches through quantum partnerships, photonic research, custom chips such as Amazon’s Inferentia, and other AI-specific silicon.

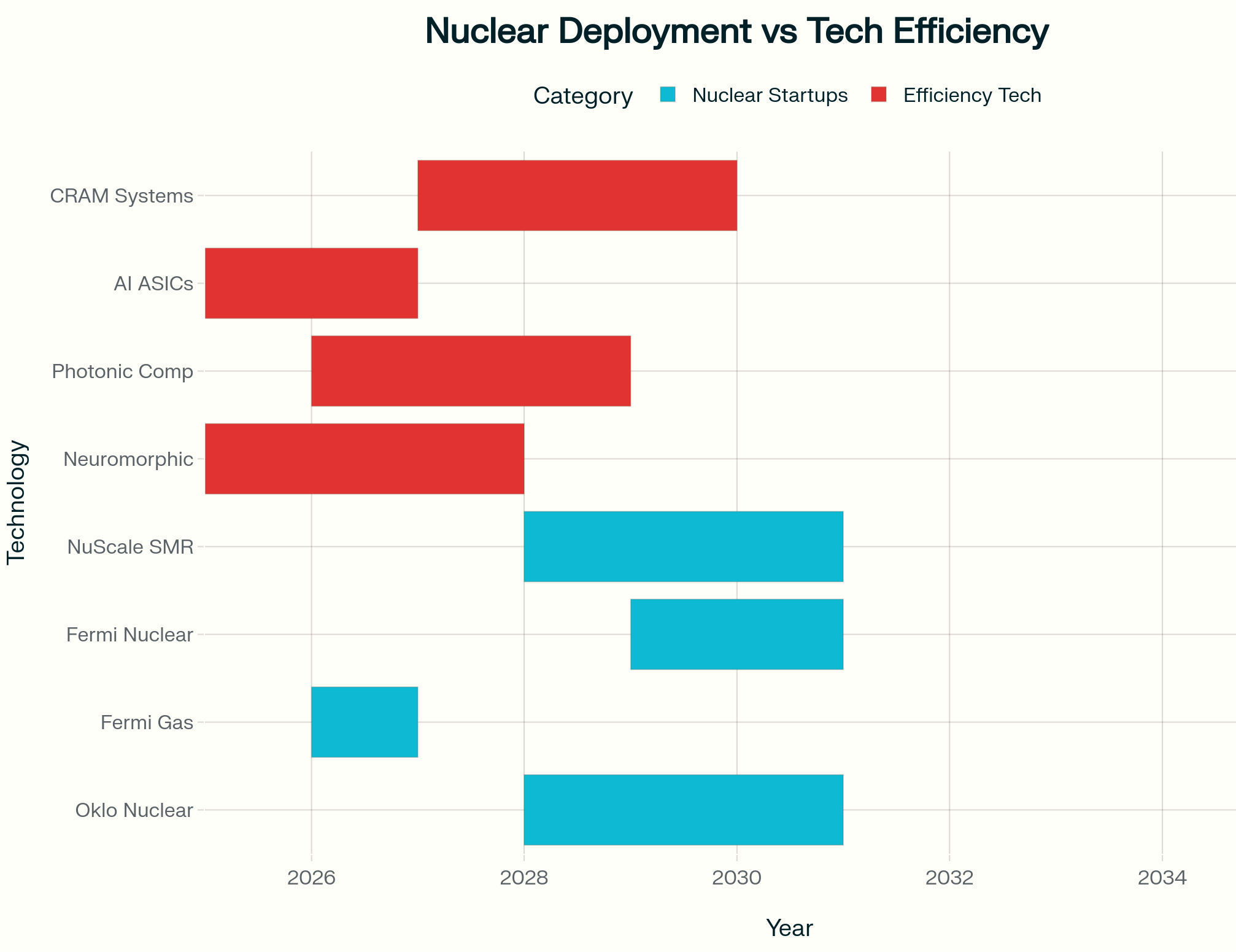

Timeline comparison: Nuclear startup deployment schedules versus energy-efficient computing technology availability

Nuclear startups won’t begin operating until 2028-2030, but competing efficiency technologies are arriving at the same time or earlier: AI ASICs are already deployed, neuromorphic systems are scaling in 2025-2027, and photonic chips are slated to reach production in 2026-2028. By the time these reactors begin operations, data centers could have adopted technologies delivering 100-1,000x power reductions, solving the problem nuclear was meant to address.

The Compound Risk: Stranded Assets

Nuclear energy startups face compounding risks across multiple dimensions:

- Technology Risk: Unproven SMR designs, uncertain licensing timelines, fuel supply constraints

- Regulatory Risk: 2-5 year minimum NRC approval processes, and no U.S. SMR has yet received an operating license

- Infrastructure Risk: 5-7 year grid connection waits

- Demand Risk: The assumption of exponentially growing GPU-based AI power consumption may be flawed

The fourth risk (demand evaporation) is the one nobody’s pricing in.

Imagine Oklo brings its first reactor online in 2030. By then:

- Neuromorphic chips have captured 15-20% of AI inference workloads at 100x efficiency

- Photonic accelerators handle another 10-15% of compute-intensive tasks at 1,000x efficiency

- ASIC and TPU improvements have doubled the GPU efficiency baseline

- Net effect: AI data center power demand 40-50% lower than 2025 projections assumed
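
How much the scenario's net effect depends on each assumption can be checked directly. A minimal Python sketch follows, assuming each percentage is a share of total projected AI power demand (a simplification, since the list above mixes inference and compute-intensive shares) and that "doubled baseline" means the workloads remaining on GPUs use half the energy the projections assumed. The `Shift` class and `relative_demand` function are hypothetical helpers.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class Shift:
    name: str
    share: float       # fraction of projected AI power demand moved
    efficiency: float  # energy-efficiency multiple versus today's GPUs


def relative_demand(shifts: Iterable[Shift], gpu_gain: float = 1.0) -> float:
    """Demand relative to projections (1.0 = as projected).

    Remaining workloads stay on GPU-class hardware whose efficiency has
    improved by `gpu_gain`; shifted workloads run `efficiency` times cheaper.
    """
    shifts = list(shifts)
    moved = sum(s.share for s in shifts)
    if not 0.0 <= moved <= 1.0:
        raise ValueError("shifted shares must sum to between 0 and 1")
    remaining = (1.0 - moved) / gpu_gain
    return remaining + sum(s.share / s.efficiency for s in shifts)


if __name__ == "__main__":
    low = [Shift("neuromorphic", 0.15, 100.0), Shift("photonic", 0.10, 1000.0)]
    high = [Shift("neuromorphic", 0.20, 100.0), Shift("photonic", 0.15, 1000.0)]
    for label, case in (("low", low), ("high", high)):
        for gain in (1.0, 2.0):
            cut = 1.0 - relative_demand(case, gain)
            print(f"{label} shift, GPU gain {gain}x: demand {cut:.1%} below projection")
```

Without the GPU doubling, the new chips alone lower demand by roughly 25-35%; with it, the cut reaches roughly 62-67%. The stated 40-50% falls between the two cases, so in this model the outcome hinges as much on conventional accelerator gains as on neuromorphic or photonic adoption.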

The result would be massive overcapacity in power generation. Traditional utilities with existing nuclear fleets could easily meet demand, while speculative startups with $20+ billion market caps but zero contracted revenue would face the same fate EV startups met when demand failed to materialize.

Investment Implications

Established utilities with diversified generation portfolios can respond flexibly to multiple demand scenarios. One such utility, operating 21 reactors today, has diversified its business model across technology paradigms.

Speculative nuclear startups with $26 billion valuations and zero revenue are making all-or-nothing bets that SMR technology works at commercial scale, regulatory approval happens on schedule, fuel supply scales adequately, grid connections materialize, and (most critically) AI power demand grows exponentially on GPU-based architecture for 20-30 years.

That fifth assumption is now the weakest link, and investors aren’t scrutinizing it.

Rather than betting on speculative nuclear to power inefficient GPUs, consider:

Direct Efficiency Investments:

- Neuromorphic computing companies (Intel’s neuromorphic chips, BrainChip, SynSense)

- Photonic computing companies (Lightmatter, Luminous Computing)

- AI accelerator manufacturers (Cerebras, Graphcore, Groq)

Diversified Infrastructure:

- Utilities with mixed generation portfolios adaptable to multiple demand scenarios

- Data center REITs investing in flexible, multi-architecture facilities

Hedged Energy Exposure:

- Avoid pure-play SMR startups entirely

- If nuclear exposure is desired, favor established fuel cycle companies (Centrus, Cameco) or utilities actually operating reactors today

The Final Takeaway

Computing history teaches a consistent lesson: efficiency gains eventually outpace raw performance scaling. Consider the shifts from vacuum tubes to transistors, from discrete components to integrated circuits, and from CPUs to GPUs. Each transition delivered orders-of-magnitude efficiency improvements that reshaped infrastructure requirements.

Neuromorphic, photonic, and specialized accelerators are demonstrating 100-1,000x efficiency gains in laboratories and early commercial deployments today. Nuclear startups carrying $26 billion in market capitalization are betting that GPU-based AI infrastructure will dominate through 2040-2050.

That bet looked reasonable in 2023. In late 2025, with photonic chips completing AI classification tasks in under half a nanosecond, neuromorphic systems running at 100x GPU efficiency, and CRAM demonstrating 2,500x improvements, the same bet carries considerable risk.

Betting billions on infrastructure to power today’s inefficient paradigm while efficiency gains of 100-1,000x are on the horizon echoes railroad investors backing steam engines just as diesel-electric locomotives were emerging.

The AI energy bubble’s collapse may not come from AI winter or nuclear technology failures. It may come from something simpler: engineers may solve the efficiency problem faster than investors expected.